Overview: Why AI intelligent workflow optimization matters now

Organizations are under constant pressure to do more with less: faster time-to-resolution for customer requests, lower operational costs for back-office work, and better compliance with evolving regulations. AI intelligent workflow optimization is the discipline of using AI models, orchestration layers, and event systems to redesign business processes so they execute with greater speed, accuracy, and adaptability.

This article breaks that discipline into practical parts for three audiences: beginners who need simple explanations and real-world scenarios, engineers who want architecture and integration patterns, and product leaders who need ROI, vendor trade-offs, and deployment advice.

Beginner section: What it is, told with a story

Imagine an insurance company receiving a flood of claims after a storm. Before AI, a claim moves from intake to verification to payout, with people routing documents, emailing suppliers, and approving exceptions. Each handoff adds delay and cost.

With AI intelligent workflow optimization, a pipeline is built where AI extracts text from photos, classifies damage severity, routes claims to automated verification when confidence is high, and flags ambiguous items for a human review queue. The system learns over time which edge cases need people and which can be closed automatically. Customers get faster payouts and the company reduces manual work.
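The routing decision in that story can be sketched in a few lines. This is a minimal illustration, not a production rule: the names `ClaimPrediction`, `route_claim`, and the 0.90 threshold are assumptions made up for the example.

```python
from dataclasses import dataclass

# Hypothetical cut-off; in practice it would be tuned on labeled claims.
AUTO_THRESHOLD = 0.90


@dataclass
class ClaimPrediction:
    claim_id: str
    severity: str      # e.g. "minor" or "major"
    confidence: float  # model confidence in [0, 1]


def route_claim(prediction: ClaimPrediction, threshold: float = AUTO_THRESHOLD) -> str:
    """Return the queue a claim should go to, based on model confidence."""
    if not 0.0 <= prediction.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if prediction.confidence >= threshold:
        return "automated_verification"
    return "human_review"
```

Keeping the threshold as a parameter lets operations staff shift more or fewer claims to people without changing the pipeline itself.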

The key takeaway: automation does not replace humans entirely; it optimizes the workflow by pairing AI decisions with orchestration. This makes processes faster, more consistent, and easier to audit.

Core concepts and components

- Models and inference: ML models (classification, OCR, NER, anomaly detection) are the decision engines.

- Orchestration layer: a workflow engine that sequences tasks, handles retries, branches, and human-in-the-loop steps.

- Eventing and integration: message buses, webhooks, or event streams connect systems and trigger workflows asynchronously.

- Human-in-the-loop: annotation UIs, approval queues, and escalation paths for exceptions.

- Observability: metrics, tracing, model telemetry, and business KPIs that close the feedback loop.

- Governance: data lineage, access controls, model versioning, and compliance controls.
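To make the orchestration layer concrete, here is a toy, in-process sketch of sequencing steps with retries. It is an assumption-laden illustration: `run_with_retries` and `run_workflow` are hypothetical helpers, and a real engine would also persist state between steps so a workflow survives restarts.

```python
import time
from typing import Any, Callable, List, Tuple

Step = Callable[[Any], Any]


def run_with_retries(step: Step, state: Any, max_attempts: int = 3,
                     backoff_seconds: float = 0.0) -> Any:
    """Call one step, retrying on any exception up to max_attempts times."""
    name = getattr(step, "__name__", repr(step))
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return step(state)
        except Exception as exc:  # a real engine would retry only transient errors
            last_error = exc
            if attempt < max_attempts:
                time.sleep(backoff_seconds * attempt)  # linear backoff
    raise RuntimeError(f"step {name} failed after {max_attempts} attempts") from last_error


def run_workflow(steps: List[Step], payload: Any) -> Tuple[Any, List[str]]:
    """Run steps in order, feeding each step's output to the next."""
    state = payload
    completed = []
    for step in steps:
        state = run_with_retries(step, state)
        completed.append(getattr(step, "__name__", repr(step)))
    return state, completed
```

The list of completed step names is the in-memory stand-in for the checkpoint a workflow engine would store durably.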

Developer and engineer playbook: Architecture, patterns, and trade-offs

Reference architecture

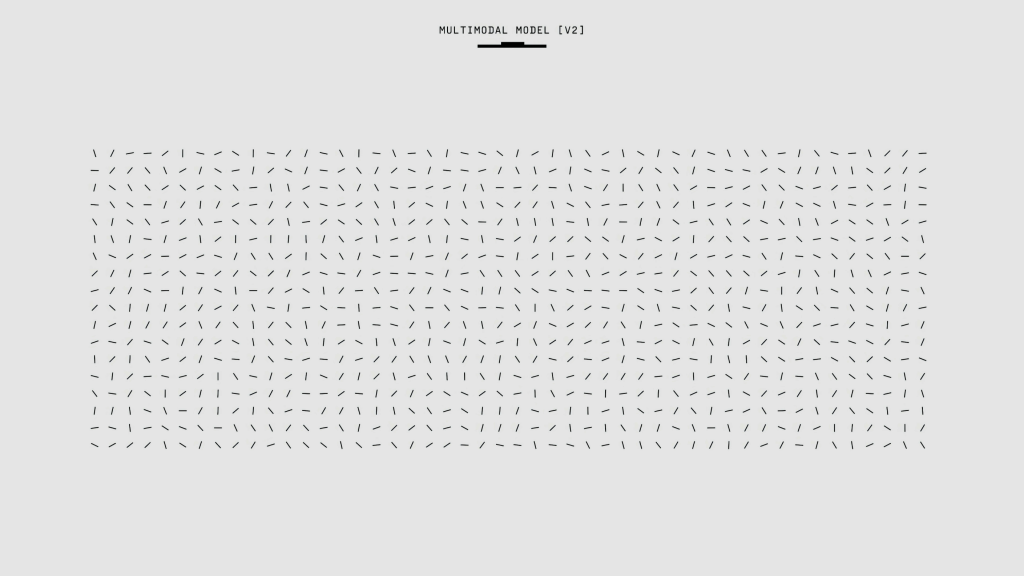

A practical stack typically includes (1) an event ingress layer (API gateway, message broker), (2) an orchestration engine (workflow service), (3) model inference endpoints (managed or self-hosted serving), (4) a human-in-the-loop UI, and (5) observability and governance services. Popular choices include Apache Kafka or AWS EventBridge for events, Temporal or Airflow/Prefect for orchestration, and Triton/BentoML/TorchServe or managed offerings for serving. Ray and Kubeflow remain strong for distributed workloads and training pipelines.

Integration patterns

- Synchronous API calls: use when latency budgets are tight (e.g., chat assistants). The trade-off is higher operational complexity: model servers must scale with request load, and callers must manage timeouts.

- Asynchronous event-driven: better for throughput and resiliency; decouples producers and consumers and enables replay for debugging.

- Orchestrator-managed tasks: the orchestrator owns state and checkpointing. Good for multi-step, long-running processes with human reviews.

- Serverless connectors: useful for unpredictable bursts, but watch cold starts and vendor limits.
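The decoupling and replay properties of the event-driven pattern can be illustrated with a single-process stand-in for a message broker. This is only a sketch: `EventLog` is a hypothetical class, handlers run inline rather than asynchronously, and a real broker would add persistence, partitions, and consumer offsets.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List, Tuple

Handler = Callable[[Any], None]


class EventLog:
    """Append-only event log with topic subscriptions and replay."""

    def __init__(self) -> None:
        self._events: List[Tuple[str, Any]] = []
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: Any) -> None:
        # Producers only append; they never call consumers directly.
        self._events.append((topic, payload))
        self._dispatch(topic, payload)

    def replay(self, topic: str) -> int:
        """Re-deliver every stored event for a topic; return how many were sent."""
        stored = [(t, p) for t, p in self._events if t == topic]
        for t, p in stored:
            self._dispatch(t, p)
        return len(stored)

    def _dispatch(self, topic: str, payload: Any) -> None:
        for handler in list(self._subscribers[topic]):
            handler(payload)
```

Because replay re-delivers events, consumers in this style generally need to be idempotent.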

API design and contract patterns

Design APIs with clear contracts between orchestration and model services: define input schemas, confidence thresholds, and retry semantics. Adopt idempotent operations and correlation IDs to track events across services. Expose model metadata (model id, version, input preprocessing) with responses to simplify auditing.
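One possible shape for such a contract is sketched below. The field names, the `IdempotentModelService` wrapper, and the in-memory result cache are all assumptions for illustration; a real service would back the cache with a durable store and define the schema in its own interface language.

```python
import uuid
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class InferenceRequest:
    idempotency_key: str  # same key on a retry means "same logical request"
    payload: dict
    correlation_id: str = field(default_factory=lambda: str(uuid.uuid4()))


@dataclass(frozen=True)
class InferenceResponse:
    correlation_id: str   # echoed back so events can be traced across services
    label: str
    confidence: float
    model_id: str         # model metadata travels with every response
    model_version: str


class IdempotentModelService:
    """Wraps a predict function so retried requests do not re-run inference."""

    def __init__(self, predict: Callable[[dict], Tuple[str, float]],
                 model_id: str, model_version: str) -> None:
        self._predict = predict
        self._model_id = model_id
        self._model_version = model_version
        self._results: Dict[str, InferenceResponse] = {}
        self.inference_calls = 0

    def handle(self, request: InferenceRequest) -> InferenceResponse:
        cached = self._results.get(request.idempotency_key)
        if cached is not None:
            return cached  # retry: return the original result unchanged
        self.inference_calls += 1
        label, confidence = self._predict(request.payload)
        response = InferenceResponse(request.correlation_id, label, confidence,
                                     self._model_id, self._model_version)
        self._results[request.idempotency_key] = response
        return response
```

With this shape, an orchestrator can retry a timed-out call safely, and an auditor can see which model version produced each decision.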

Scaling and deployment choices

Choose managed services when you need faster time-to-market and less ops burden; choose self-hosting when cost per inference or data residency rules demand it. For latency-sensitive workflows, consider colocating model servers with orchestrator components or using edge serving for remote locations. Raise inference throughput by batching requests, and scale horizontally by autoscaling replicas; on GPUs, consider model parallelism for models too large for one device and multi-instance serving to share hardware across models.
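Batching is the easiest of these techniques to show in isolation. The sketch below assumes a hypothetical `predict_batch` callable that accepts a list of inputs and returns one output per input; serving frameworks typically do this grouping for you, often with a time window as well as a size limit.

```python
from typing import Any, Callable, Iterator, List, Sequence


def chunked(items: Sequence[Any], size: int) -> Iterator[Sequence[Any]]:
    """Yield consecutive slices of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def predict_in_batches(predict_batch: Callable[[Sequence[Any]], List[Any]],
                       inputs: Sequence[Any], max_batch_size: int = 8) -> List[Any]:
    """Run inference in fixed-size batches, preserving input order."""
    outputs: List[Any] = []
    for batch in chunked(inputs, max_batch_size):
        results = predict_batch(batch)
        if len(results) != len(batch):
            raise ValueError("predict_batch must return one output per input")
        outputs.extend(results)
    return outputs
```

Larger batches usually improve accelerator utilization at the cost of per-request latency, so the batch size is a tuning knob rather than a fixed constant.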

Observability and failure modes

Track both system and model signals. System metrics: request latency percentiles, throughput, error rates, queue lengths, task backlogs. Model metrics: confidence distributions, prediction latency, input feature distributions, data drift, and downstream business metrics such as the cost of false positives. Implement alerting for SLO breaches and automatic rollback mechanisms for bad model versions.
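One common way to turn "confidence distributions" and "data drift" into a number is the Population Stability Index (PSI), which compares a baseline histogram with a current one. The sketch below is one option among several drift measures; the bin edges and any alert threshold you attach to the result are assumptions to tune for your data.

```python
import bisect
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float],
                  floor: float = 1e-6) -> List[float]:
    """Fraction of values per bin; empty bins are floored to avoid log(0)."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        if v < edges[0] or v > edges[-1]:
            continue  # out-of-range values are ignored in this sketch
        # bisect_right locates the bin; the top edge is folded into the last bin
        idx = min(bisect.bisect_right(edges, v) - 1, len(counts) - 1)
        counts[idx] += 1
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the bin edges")
    return [max(c / total, floor) for c in counts]


def population_stability_index(baseline: Sequence[float], current: Sequence[float],
                               edges: Sequence[float]) -> float:
    """PSI = sum over bins of (current - baseline) * ln(current / baseline)."""
    expected = bin_fractions(baseline, edges)
    actual = bin_fractions(current, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))
```

A PSI of zero means the two histograms match; larger values indicate a bigger shift and can feed the alerting and rollback mechanisms described above.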

Security and governance

Encrypt data in transit and at rest, apply least-privilege roles for model access, and vet third-party model providers for supply chain risk. Build access logs, model explainability traces, and data lineage to comply with regulations like the EU AI Act or industry-specific rules (HIPAA for health, GLBA for finance). Mitigate prompt injection and adversarial inputs through input validation, sandboxing, and human review gates.

Product and industry perspective: ROI, vendor choices, and case studies

ROI drivers and KPIs

Primary ROI often comes from reduced manual handling time, faster cycle times, and error reduction. Measure automation rate (percentage of cases auto-completed), average handling time before and after, human review load, and business outcomes like fraud detection improvement or revenue retention.
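These KPIs are simple ratios, and it helps to pin down the arithmetic before a pilot starts. The sketch below assumes a hypothetical `Case` record with a handling time and an auto-completion flag; the numbers in the usage note are made up for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Case:
    handling_minutes: float
    auto_completed: bool


def automation_rate(cases: Sequence[Case]) -> float:
    """Share of cases closed without human handling."""
    if not cases:
        raise ValueError("no cases to measure")
    return sum(1 for c in cases if c.auto_completed) / len(cases)


def average_handling_time(cases: Sequence[Case]) -> float:
    """Mean handling time in minutes across all cases."""
    if not cases:
        raise ValueError("no cases to measure")
    return sum(c.handling_minutes for c in cases) / len(cases)


def relative_reduction(before: float, after: float) -> float:
    """Fractional improvement, e.g. 0.6 means a 60% reduction."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (before - after) / before
```

For example, if average handling time falls from 20 minutes to 8 minutes, `relative_reduction(20, 8)` gives 0.6, a 60% reduction.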

Vendor vs open-source trade-offs

- Managed platform vendors: e.g., UiPath for RPA with AI integrations, Microsoft Power Automate with Copilot features, or cloud-native offerings from AWS and Google. Pros: faster setup, integrated observability, managed scaling. Cons: cost at scale, less control over model internals, potential vendor lock-in.

- Open-source and self-hosted: Airflow/Prefect/Temporal for orchestration, Ray for distributed execution, and Hugging Face model hubs for models. Pros: flexibility, cost control, customizability. Cons: higher ops burden and upfront engineering investment.

Case studies

Financial services: A bank combined automated KYC document extraction with a Temporal-based orchestrator. Result: 60% reduction in manual review hours and 40% faster onboarding. Lessons: invest in confidence thresholds and escalation rules to manage risk.

Customer support: A SaaS vendor layered intent classification and retrieval-augmented generation for first-touch responses, routing complex tickets to humans with prefilled context. Result: first response time dropped by 70% and CSAT rose. Lessons: monitor hallucination risk and include clear “human escalation” points.

Manufacturing: Predictive maintenance workflows used sensor streams and anomaly models to trigger inspection workflows. Result: prevented several critical failures and reduced downtime. Lessons: ensure tight integration between eventing layers and field devices; latency between detection and action matters.

Practical implementation playbook (step-by-step in prose)

1. Map the current process and identify repetitive, rule-heavy, or high-latency steps. Quantify the time and cost per step.

2. Define clear success metrics (automation rate, latency reduction, error reduction). Start with a narrow scope — one end-to-end process rather than many partial automations.

3. Choose an orchestration pattern: event-driven for high throughput, orchestrator-managed for complex multi-step flows with human tasks.

4. Select model types and serving architecture. For language tasks, consider large foundation models via managed APIs, or self-hosted transformers where data residency is required. For vision tasks, choose specialized inference engines.

5. Build human-in-the-loop interfaces early. These accelerate labeling, provide a safety net, and build operator trust.

6. Instrument everything: add tracing, SLOs, data drift detectors, and business KPIs. Tune automated thresholds and rollback conditions before broad rollout.

7. Pilot, measure, and iterate. Use feedback from operations to refine models, adjust orchestration flows, and expand automation scope stepwise.
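Threshold tuning in step 6 can be done from pilot data. The sketch below is one simple approach under stated assumptions: `scored` is a hypothetical list of (confidence, was_correct) pairs from human-reviewed pilot cases, and the goal is the lowest threshold whose auto-approved cases still meet a target precision.

```python
from typing import List, Optional, Tuple


def pick_threshold(scored: List[Tuple[float, bool]],
                   target_precision: float) -> Optional[float]:
    """Lowest confidence threshold whose accepted cases meet target precision.

    Returns None when no threshold in the data reaches the target.
    """
    candidates = sorted({confidence for confidence, _ in scored})
    for threshold in candidates:  # ascending: lower threshold = more automation
        accepted = [correct for confidence, correct in scored
                    if confidence >= threshold]
        precision = sum(accepted) / len(accepted)
        if precision >= target_precision:
            return threshold
    return None
```

Returning `None` rather than a default forces an explicit decision to keep the step fully manual when the model is not yet good enough.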

Technical risks and mitigation

- Model drift: detect with input and output distribution checks; retrain or gate models when drift is detected.

- Over-automation: measure user satisfaction; keep low-risk fallbacks and human review percentages adjustable.

- Latency spikes: provision buffer queues and autoscaling, and consider nearline processing for non-real-time tasks.

- Data leakage: review logging practices, redact sensitive fields, and enforce retention policies.
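For the data leakage item, redaction before logging can be sketched as follows. The key list and the email pattern are illustrative assumptions only; a real deployment would derive them from its own data classification policy and cover more identifier types.

```python
import re
from typing import Any, Dict

# Hypothetical field names treated as sensitive in this example.
SENSITIVE_KEYS = {"ssn", "email", "account_number"}

# Simple email matcher for free-text fields; not a full address validator.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact(record: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the record that is safer to write to logs."""
    clean: Dict[str, Any] = {}
    for key, value in record.items():
        if key.lower() in SENSITIVE_KEYS:
            clean[key] = "[REDACTED]"
        elif isinstance(value, dict):
            clean[key] = redact(value)  # recurse into nested objects
        elif isinstance(value, str):
            clean[key] = EMAIL_PATTERN.sub("[REDACTED_EMAIL]", value)
        else:
            clean[key] = value
    return clean
```

The function returns a new dictionary, so the original record stays intact for the workflow while only the redacted copy reaches the log pipeline.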

Market signals and technology trends

There’s growing convergence between RPA vendors and ML platforms. Notable ecosystem movement includes advances in orchestration frameworks like Temporal, greater adoption of Ray for distributed workloads, and the proliferation of retrieval-augmented generation patterns. Large-scale AI pretraining remains a foundational trend — models pretrained at scale reduce the amount of supervised data needed for downstream automation tasks.

Research and product work with PaLM and other large pretrained language models has shown strong gains in language understanding, which helps automation use cases such as document understanding and conversational workflows. Be mindful that large pretrained models often change cost dynamics: inference cost, latency, and the need for specialized infrastructure all increase.

Vendor snapshot and evaluation checklist

When evaluating providers consider:

- How they integrate with your existing event and identity systems.

- Support for human-in-the-loop and audit trails.

- Model governance features: versioning, lineage, explainability.

- Billing model: per-inference vs reserved capacity and how that impacts cost predictability.

- Data residency and compliance support for your industry.
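The billing item on this checklist comes down to a break-even calculation. The sketch below uses hypothetical prices and function names purely to show the arithmetic; real pricing usually has tiers, minimums, and overage charges that this ignores.

```python
def per_inference_cost(monthly_requests: int, price_per_request: float) -> float:
    """Monthly spend under pure pay-per-inference pricing."""
    return monthly_requests * price_per_request


def break_even_requests(reserved_monthly_cost: float, price_per_request: float) -> float:
    """Monthly volume at which reserved capacity costs the same as per-inference."""
    if price_per_request <= 0:
        raise ValueError("price_per_request must be positive")
    return reserved_monthly_cost / price_per_request


def cheaper_option(monthly_requests: int, price_per_request: float,
                   reserved_monthly_cost: float) -> str:
    """Name the cheaper billing model for a given monthly volume."""
    usage_cost = per_inference_cost(monthly_requests, price_per_request)
    return "per_inference" if usage_cost < reserved_monthly_cost else "reserved"
```

With an assumed price of 0.002 per request and a reserved plan at 3,000 per month, the break-even is about 1.5 million requests per month; below that volume, per-inference billing is cheaper but less predictable.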

Looking ahead

AI intelligent workflow optimization will increasingly blend pretraining scale with tighter orchestration. Expect stronger tooling around safe model deployment, automated model selection for workflows, and more opinionated “AI operating systems” that bundle orchestration, model serving, and governance. Standards and regulation — notably the EU AI Act — will shape how automation is adopted in regulated industries and push for more explainability and auditability.

Key takeaways

Start small, measure rigorously, and choose the right balance between managed and self-hosted platforms. Combine event-driven patterns for throughput with orchestrator-managed flows for complex processes. Instrument models and systems thoroughly to avoid silent failures, and plan for human oversight where business risk is high. Finally, recognize that investments in model infrastructure, including large-scale pretrained foundations and the careful use of models like PaLM for language-heavy tasks, can accelerate automation, but they also change operational and cost trade-offs.