Why AI city infrastructure monitoring matters

Imagine a mid-size city where a burst water main floods a downtown street at 2 a.m., or a traffic signal failure creates a rush-hour bottleneck. Traditional inspection and manual alerting are slow and expensive. AI city infrastructure monitoring brings together cameras, sensors, and models to detect anomalies, prioritize responses, and predict failures before they happen. For a city administrator, that means faster incident response, lower maintenance cost, and fewer surprises. For residents, it means safer streets, cleaner water, and more reliable services.

Core concepts explained simply

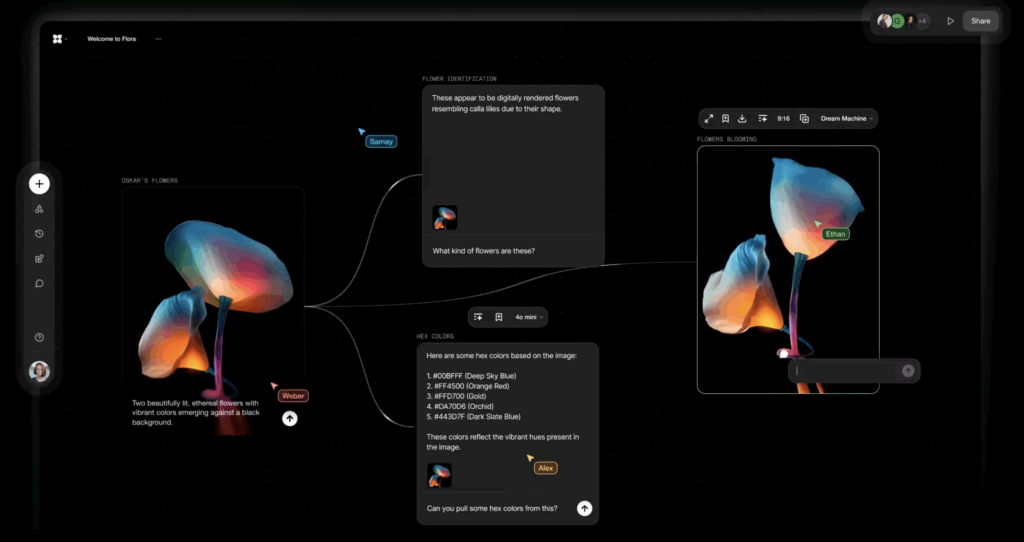

At its heart, AI city infrastructure monitoring is about three layers: sensing, inference, and action. Sensors (video cameras, water pressure gauges, vibration sensors, air-quality monitors) capture raw signals. Models running either at the edge or in the cloud turn those signals into events (flood alert, structural anomaly, suspicious behavior). An orchestration layer maps events to workflows: notify a field crew, close a valve, adjust traffic timing, or create a ticket in a CMMS (Computerized Maintenance Management System).
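The three layers can be sketched in a few lines of Python. This is a minimal illustration, not a reference design: the `Reading` and `Event` types, the `water_pressure_kpa` signal name, the 150 kPa threshold, and the workflow table are all assumptions made for the example, and a simple threshold rule stands in for a trained model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class Reading:
    """Sensing layer output: one raw measurement from one device."""
    device_id: str
    kind: str      # hypothetical signal name, e.g. "water_pressure_kpa"
    value: float


@dataclass(frozen=True)
class Event:
    """Inference layer output: an interpreted signal."""
    event_type: str
    device_id: str
    confidence: float


def infer(reading: Reading) -> Optional[Event]:
    """Inference layer: a threshold rule standing in for a trained model."""
    # Assumed threshold; a real deployment would calibrate it per pipe segment.
    if reading.kind == "water_pressure_kpa" and reading.value < 150.0:
        return Event("possible_leak", reading.device_id, confidence=0.8)
    return None


# Action layer: map event types to workflows (entries are illustrative).
WORKFLOWS: Dict[str, Callable[[Event], str]] = {
    "possible_leak": lambda e: f"ticket: inspect main near {e.device_id}",
}


def act(event: Event) -> str:
    """Run the workflow for an event, or just log unknown event types."""
    handler = WORKFLOWS.get(event.event_type)
    return handler(event) if handler else f"log only: {event.event_type}"


def run_pipeline(readings: List[Reading]) -> List[str]:
    """Sensing -> inference -> action for a batch of readings."""
    actions = []
    for reading in readings:
        event = infer(reading)
        if event is not None:
            actions.append(act(event))
    return actions
```

Keeping the three layers as separate functions mirrors the separation in the text: a sensor, model, or workflow can be swapped without touching the other two.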

Real-world analogy

Think of the system as a city’s nervous system. Sensors are the sensory nerves, the models are the spinal cord interpreting signals, and the orchestration layer is the brain deciding how to act. If any part is weak — noisy sensors, brittle models, or poor workflows — the whole system fails to protect the city.

Architectural patterns and system components

There are repeatable architecture patterns for building robust AI monitoring platforms. Below are the most common layers and choices.

1. Edge vs. cloud for inference

- Edge-first: Low latency and minimal bandwidth use, because raw data stays on the device. Useful for real-time traffic signal adjustments or on-camera person detection. Runs on devices like NVIDIA Jetson, Intel Movidius, or city-owned mini-servers.

- Cloud-first: Centralized heavy analytics and historical correlation. Better for long-term predictive maintenance, trend analysis, or models that require large context and compute.

- Hybrid: Short-term inference at the edge, aggregated insights and model retraining in the cloud. Most practical city deployments are hybrid.

2. Messaging and event buses

Event-driven designs use streams to decouple producers (sensors) from consumers (models, dashboards, actions). Kafka and Pulsar are common choices for city scale. They allow replay of events for debugging and retraining, and support topics per domain (traffic, water, power).
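The replay property is easiest to see with a toy append-only log. The sketch below is an in-memory stand-in written for illustration only; it does not use the Kafka or Pulsar client APIs, and the class and method names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, List, Tuple


class InMemoryEventLog:
    """Append-only log per topic; a toy stand-in for a Kafka/Pulsar topic."""

    def __init__(self) -> None:
        self._topics: Dict[str, List[Any]] = defaultdict(list)

    def publish(self, topic: str, event: Any) -> int:
        """Append an event and return its offset within the topic."""
        log = self._topics[topic]
        log.append(event)
        return len(log) - 1

    def read(self, topic: str, from_offset: int = 0) -> List[Tuple[int, Any]]:
        """Return (offset, event) pairs starting at from_offset.

        Reading never removes events, so any consumer can replay history
        independently of every other consumer.
        """
        log = self._topics.get(topic, [])
        return [(i, log[i]) for i in range(from_offset, len(log))]


log = InMemoryEventLog()
log.publish("water", {"device_id": "wp-1", "pressure_kpa": 120})
log.publish("traffic", {"device_id": "sig-9", "state": "fault"})
log.publish("water", {"device_id": "wp-2", "pressure_kpa": 310})

# A dashboard reads the whole water topic; a retraining job replays from offset 1.
dashboard_view = log.read("water")
retraining_view = log.read("water", from_offset=1)
```

Because producers only append and consumers only track an offset, a new consumer (for example, a debugging tool) can be added later without any change to the sensors.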

3. Model serving and orchestration

Model serving options vary, from lightweight model servers on edge devices to scalable inference clusters on Kubernetes. Serving frameworks such as NVIDIA Triton Inference Server, KServe, and Ray Serve, along with managed cloud offerings, handle some combination of autoscaling, batching, and multi-model endpoints. When you need to orchestrate multi-step tasks (for example, run a person detector, then a tracking model, and finally a policy engine), you need an orchestration layer that can chain services and manage retries and state.
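A minimal sketch of chaining steps with retries follows. It assumes each step is a plain Python callable that takes and returns a state dictionary; the three step functions are hypothetical stand-ins for calls to real model endpoints, and the tracker is made to fail once to simulate a transient error.

```python
import time
from typing import Any, Callable, Sequence

Step = Callable[[Any], Any]


def run_with_retries(step: Step, payload: Any, max_attempts: int = 3,
                     backoff_s: float = 0.0) -> Any:
    """Call one step, retrying on any exception up to max_attempts times."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return step(payload)
        except Exception as exc:  # a real system would catch narrower errors
            last_error = exc
            if attempt < max_attempts:
                time.sleep(backoff_s * attempt)  # linear backoff
    name = getattr(step, "__name__", repr(step))
    raise RuntimeError(f"{name} failed after {max_attempts} attempts") from last_error


def run_chain(steps: Sequence[Step], payload: Any) -> Any:
    """Feed the output of each step into the next one."""
    state = payload
    for step in steps:
        state = run_with_retries(step, state)
    return state


# Hypothetical stand-ins for a detector, a tracker, and a policy engine.
def detect_people(frame: dict) -> dict:
    return {**frame, "boxes": [(10, 20, 50, 80)]}


tracker_calls = {"count": 0}


def track(state: dict) -> dict:
    tracker_calls["count"] += 1
    if tracker_calls["count"] == 1:
        raise TimeoutError("tracker unavailable")  # simulated transient failure
    return {**state, "track_ids": list(range(len(state["boxes"])))}


def apply_policy(state: dict) -> dict:
    return {**state, "alert": len(state["track_ids"]) > 0}
```

Calling `run_chain([detect_people, track, apply_policy], {"camera_id": "cam-7"})` retries the tracker once and then completes. Production orchestrators add durable state and timeouts on top of this basic pattern.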

Integration patterns and API design

Good integration is what makes an AI monitoring project operationally viable.

API contracts and versioning

Expose model outputs as typed events with clear schemas (for example, event type, confidence, bounding box, timestamp, device_id, location). Use a schema registry with Avro or Protobuf schemas to enforce backward compatibility. Design REST or gRPC endpoints for synchronous queries (on-demand camera snapshots) and stream-based APIs for continuous feeds. Always include model metadata (version, training data hashes, and performance metrics) in API responses for traceability.
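As one possible shape for such an event, the sketch below uses Python dataclasses and JSON. The field names follow the list above; the `schema_version` field, the `ModelMetadata` type, and the validation rule are assumptions added for illustration, and a production system would more likely define the schema in Avro or Protobuf.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class ModelMetadata:
    """Traceability fields attached to every event (illustrative)."""
    name: str
    version: str
    training_data_hash: str


@dataclass(frozen=True)
class DetectionEvent:
    schema_version: int                 # bump on breaking schema changes
    event_type: str
    confidence: float                   # expected in [0, 1]
    timestamp: str                      # ISO 8601, UTC
    device_id: str
    location: Tuple[float, float]       # (latitude, longitude)
    model: ModelMetadata
    bounding_box: Optional[Tuple[int, int, int, int]] = None  # x, y, w, h

    def __post_init__(self) -> None:
        # Reject malformed events at the producer, before they hit the stream.
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be in [0, 1]")

    def to_json(self) -> str:
        """Serialize to JSON with stable key order."""
        return json.dumps(asdict(self), sort_keys=True)


event = DetectionEvent(
    schema_version=1,
    event_type="flood_alert",
    confidence=0.93,
    timestamp="2024-01-01T02:00:00Z",
    device_id="cam-017",
    location=(40.0, -75.0),
    model=ModelMetadata("flood-detector", "1.4.2", "sha256:placeholder"),
)
payload = event.to_json()
```

Optional fields with defaults, such as `bounding_box`, are the kind of addition that can usually be made without breaking existing consumers.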

Integration patterns

- Synchronous API: Quick snapshots and manual queries, useful for dashboards and on-demand playback.

- Event-driven: Sensors emit events to a stream; downstream consumers process, filter, and enrich. This is more scalable for continuous monitoring.

- Command-and-control: Orchestration service issues actions to actuators (traffic lights, valves) with guaranteed delivery and audit trails.
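The command-and-control pattern can be sketched as a dispatcher that retries until the actuator acknowledges, ignores duplicate command IDs, and records every attempt. This is an illustration under stated assumptions: `send` is a hypothetical transport callback, and retry-until-acknowledged gives at-least-once delivery rather than a strict guarantee.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List, Set


@dataclass
class CommandDispatcher:
    # Hypothetical transport: (actuator_id, action) -> True if acknowledged.
    send: Callable[[str, str], bool]
    max_attempts: int = 3
    audit_log: List[dict] = field(default_factory=list)
    completed: Set[str] = field(default_factory=set)

    def _audit(self, command_id: str, actuator_id: str, action: str,
               issued_by: str, outcome: str) -> None:
        """Append one immutable-by-convention audit record."""
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "command_id": command_id,
            "actuator_id": actuator_id,
            "action": action,
            "issued_by": issued_by,
            "outcome": outcome,
        })

    def dispatch(self, command_id: str, actuator_id: str, action: str,
                 issued_by: str) -> bool:
        """Deliver a command; repeated command_ids are not re-sent."""
        if command_id in self.completed:
            self._audit(command_id, actuator_id, action, issued_by,
                        "duplicate_ignored")
            return True
        for _ in range(self.max_attempts):
            acked = self.send(actuator_id, action)
            self._audit(command_id, actuator_id, action, issued_by,
                        "acked" if acked else "no_ack")
            if acked:
                self.completed.add(command_id)
                return True
        return False  # caller decides whether to escalate to a human
```

The command ID makes retries safe: if an upstream service resubmits the same "close valve" command after a timeout, the actuator is not toggled twice.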

Deep learning model deployers and MLOps

Production readiness requires mature deep learning model deployers and an MLOps pipeline. Deployers differ in how they handle batching, GPU utilization, model warming, and version routing.

Key operational features to evaluate:

- Model warmup and cold-start latency. Real-time city applications need predictable latency.

- Batching strategies for throughput vs latency trade-offs.

- Multi-model hosting and routing based on device/location.

- Canarying, rolling updates, and rollback semantics integrated with CI/CD.
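One way to combine canarying with per-device routing is to hash the device ID into a stable bucket. The sketch below is a generic technique, not the routing mechanism of any particular serving framework; the function names and the 100-bucket scheme are assumptions.

```python
import hashlib


def canary_bucket(device_id: str) -> int:
    """Map a device ID to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(device_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100


def choose_model_version(device_id: str, stable: str, canary: str,
                         canary_percent: int) -> str:
    """Route roughly canary_percent of devices to the canary version.

    The same device always gets the same answer for a given percentage,
    and raising the percentage only ever adds devices to the canary group.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return canary if canary_bucket(device_id) < canary_percent else stable
```

Setting `canary_percent` back to 0 acts as a rollback, since every device then resolves to the stable version again.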

Hardware choices and AI-optimized processors

Choosing compute hardware influences cost, power, and latency. AI-optimized processors — from NVIDIA A100 and Jetson families to Google TPU and Intel Habana — provide different profiles:

- Edge accelerators (Jetson, Movidius): low power, suitable for per-camera inference.

- Data-center and cloud accelerators (NVIDIA T4/A100, Google TPU, Graphcore): good for bulk retraining and high-throughput inference.

- Specialized ASICs: can lower long-term operational cost but increase vendor lock-in.

Consider power constraints, heat, physical security, and model compatibility (TensorRT, OpenVINO) when selecting hardware.

Deployment and scaling considerations

Scaling an AI monitoring system for a city is not the same as scaling a single web app. Common patterns and trade-offs:

- Federated deployment: Each district has local compute and storage to continue operating when network links fail.

- Autoscaling vs. reserved capacity: For predictable peak loads (rush hour), reserved capacity plus autoscaling reduces cold-start risk.

- Bandwidth and cost: Streaming raw video to the cloud is expensive. Consider on-device compression, event-only uploads, or selective recording.

- Model lifecycle: Continuous retraining requires pipelines for labeling, validation, and safe rollout to prevent regression in safety-critical flows.
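The bandwidth point above is easy to check with back-of-the-envelope arithmetic. The figures used here (a 4 Mbps camera stream, 500 events per day at 2 KB each) are hypothetical inputs chosen for illustration, not measurements.

```python
def video_gb_per_month(bitrate_mbps: float, hours_per_day: float = 24.0,
                       days: int = 30) -> float:
    """Data volume of a continuous video stream, in decimal gigabytes."""
    megabytes_per_second = bitrate_mbps / 8.0   # 8 bits per byte
    return megabytes_per_second * 3600 * hours_per_day * days / 1000.0


def events_gb_per_month(events_per_day: int, bytes_per_event: int,
                        days: int = 30) -> float:
    """Data volume of event-only uploads, in decimal gigabytes."""
    return events_per_day * bytes_per_event * days / 1e9


# Hypothetical camera: 4 Mbps stream versus 500 events/day at 2 KB each.
raw_video = video_gb_per_month(4.0)            # 1296.0 GB per camera per month
event_only = events_gb_per_month(500, 2000)    # 0.03 GB per camera per month
```

Under these assumed inputs, event-only upload is several orders of magnitude smaller per camera, which is why it tends to dominate the networking budget decision for large fleets.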

Observability, monitoring signals, and failure modes

Observability must cover both infra and model-level signals. Typical metrics:

- Infrastructure: CPU/GPU utilization, network throughput, device heartbeat, power draw.

- Model performance: latency percentiles (p50/p90/p99), throughput (frames or inferences per second), accuracy drift, false positive/negative rates.

- Business KPIs: incident detection lead time, mean time to repair, number of false alerts per week.

Monitoring tools like Prometheus, Grafana, Elastic, and Datadog are commonly used, augmented with model-validation dashboards that track concept drift and data distribution shifts. Failure modes to watch for include sensor degradation, network partitions, training-serving skew, and unhandled edge cases that trigger alert storms.
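Two of the model-level signals above can be computed with the standard library alone. The sketch below uses the nearest-rank method for latency percentiles and the population stability index (PSI) as one common way to quantify a distribution shift; the bin count and the `eps` floor are assumptions, and alert thresholds would need to be tuned per deployment.

```python
import math
from typing import List, Sequence


def percentile(values: Sequence[float], p: float) -> float:
    """Nearest-rank percentile, e.g. p=99 for p99 latency."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100.0))
    return ordered[rank - 1]


def population_stability_index(baseline: Sequence[float],
                               current: Sequence[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI between two samples using equal-width bins over their joint range.

    PSI = sum((cur_i - base_i) * ln(cur_i / base_i)) over bins; it is 0 for
    identical distributions and grows as they diverge.
    """
    lo = min(min(baseline), min(current))
    hi = max(max(baseline), max(current))
    width = (hi - lo) / bins or 1.0  # avoid zero width if all values are equal

    def fractions(sample: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for x in sample:
            idx = min(int((x - lo) / width), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(sample), eps) for c in counts]

    base, cur = fractions(baseline), fractions(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))
```

In practice the baseline would be a sample of a model input or score from validation time, and the current sample would come from a recent window of production traffic.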

Security, privacy, and governance

Cities handle sensitive resident data. Governance considerations are core requirements, not optional extras.

- Data minimization: avoid storing raw video when possible; use derived events and aggregated metrics.

- Encryption in transit and at rest; hardware TPMs for tamper resistance on edge devices.

- Access control: role-based access control (RBAC) for both APIs and deployment pipelines, audit logging of API calls, and signed model artifacts.

- Compliance: GDPR, local privacy laws, and procurement policies that affect data residency and vendor selection.

- Ethics and bias: independent audits of models used for enforcement decisions; appeal processes and clear logging.
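To make the signed-artifact idea from the access control item concrete, the sketch below verifies a model file with an HMAC-SHA256 tag before it is loaded. It is a simplified stand-in: a shared secret key is assumed here for brevity, whereas a real deployment would more likely use asymmetric signatures so that edge devices hold only a public key.

```python
import hashlib
import hmac


def sign_artifact(artifact: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the artifact bytes."""
    return hmac.new(key, artifact, hashlib.sha256).hexdigest()


def verify_artifact(artifact: bytes, key: bytes, signature: str) -> bool:
    """Check the tag in constant time before loading a model."""
    expected = sign_artifact(artifact, key)
    return hmac.compare_digest(expected, signature)


# Illustrative values only; never hard-code real keys.
key = b"example-signing-key"
model_bytes = b"fake model weights"
tag = sign_artifact(model_bytes, key)
```

An edge device would call `verify_artifact` on every downloaded model and refuse to load it when the check fails, which turns a tampered or corrupted artifact into a visible deployment error instead of silent misbehavior.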

Operational challenges and common pitfalls

Practical deployments often stumble on integration gaps rather than model accuracy. Common pitfalls include:

- Poorly instrumented field devices that fail without clear diagnostics.

- Underestimating data labeling effort for edge cases like construction zones or seasonal changes.

- Lack of cross-team ownership: the platform team builds pipelines, but operations, maintenance, and policy teams must be aligned.

- Overfitting to a narrow geography or a particular camera angle, causing models to fail quickly when expanded citywide.

Vendor choices and market landscape

Vendors range from cloud providers (AWS IoT/Lookout, Azure IoT, and Google Cloud, which retired its IoT Core service in 2023) to specialized platform players and open-source stacks. Managed offerings reduce operational burden but can be costlier and create vendor lock-in. Self-hosted stacks (Kubernetes + KServe/Triton + Kafka + Prometheus) require skilled SRE and MLOps teams. Hybrid approaches often use managed control planes but self-host edge fleets.

Open-source projects, SDKs, and standards to consider: Kubernetes, KServe, Ray, OpenVINO, NVIDIA DeepStream, EdgeX Foundry, and the NIST AI Risk Management Framework. Recent product moves, such as cloud providers offering on-prem control planes or edge device management, reflect market demand for hybrid solutions.

ROI and case snapshots

ROI for city projects can be measured in reduced emergency response times, avoided asset replacements via predictive maintenance, and improved citizen satisfaction. Examples:

- Traffic optimization: A pilot where adaptive signal control reduced average commute time by 6% and emergency response delays by 12%.

- Water infrastructure: Predictive leak detection lowered emergency repairs by 30% and also reduced water loss.

- Bridge maintenance: Vibration-based anomaly detection led to targeted inspections that deferred expensive full-deck replacement.

When presenting ROI, include total cost of ownership: sensors, installation, networking, compute, labeling, and ongoing model management.
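A small calculation helps keep the cost categories above in one place. Every number in this sketch is a hypothetical placeholder, included only to show the arithmetic; substitute figures from your own procurement and operations data.

```python
from typing import Dict, Optional


def total_cost_of_ownership(upfront: Dict[str, float],
                            annual: Dict[str, float], years: int) -> float:
    """One-time costs plus recurring costs over the evaluation period."""
    return sum(upfront.values()) + years * sum(annual.values())


def payback_years(upfront_total: float, annual_cost: float,
                  annual_benefit: float) -> Optional[float]:
    """Years until net annual benefit repays the upfront spend.

    Returns None when the benefit never exceeds the running cost.
    """
    net = annual_benefit - annual_cost
    if net <= 0:
        return None
    return upfront_total / net


# Hypothetical figures for illustration only.
upfront = {"sensors": 400_000, "installation": 250_000, "networking": 150_000}
annual = {"compute": 120_000, "labeling": 60_000, "model_management": 120_000}

tco_5y = total_cost_of_ownership(upfront, annual, years=5)   # 2,300,000
payback = payback_years(sum(upfront.values()), sum(annual.values()),
                        annual_benefit=500_000)              # 4.0 years
```

Listing labeling and model management as explicit recurring lines makes it harder to present a pilot's hardware cost as the whole budget.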

Future outlook and trends

Expect continued moves toward decentralized intelligence, better support for federated learning to preserve privacy, and more efficient model runtimes for AI-optimized processors. Standards around model explainability and procurement will help cities adopt at scale. Emerging open-source agent frameworks and orchestration layers will make it easier to compose multi-step automations like incident triage pipelines.

Implementation playbook

- Define measurable outcomes: decide which incidents or degradations you will detect and the KPI improvement targets.

- Run a data collection pilot: instrument a small area with varied sensors and collect labeled data for 4–12 weeks.

- Choose an architecture: edge-first if latency-critical, hybrid for scale and cost balance.

- Select deployers and hardware: evaluate deep learning model deployers and AI-optimized processors based on latency, throughput, and power profile.

- Build pipelines: ingestion, model training, validation, canary rollout, and a rollback plan tied to business KPIs.

- Operationalize: implement observability, runbooks, and cross-team SLAs with field crews and legal/privacy teams.

- Scale gradually: expand geography, refine models, and instrument for drift detection and retraining cadence.
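The "rollback plan tied to business KPIs" step can be expressed as an explicit gate. The sketch below compares a canary model against the current baseline on two KPIs named earlier in this article (false alerts per week and incident detection lead time); the `KpiSnapshot` type and the tolerance values are assumptions to be replaced with limits agreed with operations teams.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KpiSnapshot:
    false_alerts_per_week: float
    detection_lead_time_min: float   # minutes of warning; higher is better


def should_rollback(baseline: KpiSnapshot, canary: KpiSnapshot,
                    max_false_alert_increase: float = 0.10,
                    max_lead_time_drop: float = 0.05) -> bool:
    """Return True if the canary regresses on either KPI beyond tolerance."""
    too_noisy = canary.false_alerts_per_week > (
        baseline.false_alerts_per_week * (1 + max_false_alert_increase))
    too_slow = canary.detection_lead_time_min < (
        baseline.detection_lead_time_min * (1 - max_lead_time_drop))
    return too_noisy or too_slow


baseline = KpiSnapshot(false_alerts_per_week=20, detection_lead_time_min=30)
```

Writing the gate down as code before the first rollout forces the tolerances to be agreed in advance, rather than argued over during an incident.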

Key Takeaways

AI city infrastructure monitoring is achievable and valuable when approached as a systems engineering problem: sensors, models, orchestration, and governance all matter equally.

Successful projects balance edge and cloud, choose the right deployers and processors for the workload, and invest in observability and governance early. With careful design, cities can move from reactive maintenance to predictive, cost-effective, and privacy-aware operations.